The elephant in the room

Using AI to generate content for blogs is a concern because Google (and others) have come out and said that:

- They’ll detect AI-generated content

- AI-generated content will have ‘signatures’ in the future to show that it is AI-generated

- AI-generated content could get de-ranked (ranked lower) in search engines.

On the 3rd point, Google has also said that AI-generated content is okay, as long as your blog post has good content that demonstrates E-A-T (expertise, authoritativeness, trustworthiness).

But while this may be true, Google might just penalize anything with any AI-generated content to keep things simple in the future.

So to be safe, I added AI-detection evasion to Wraith Scribe.

Results

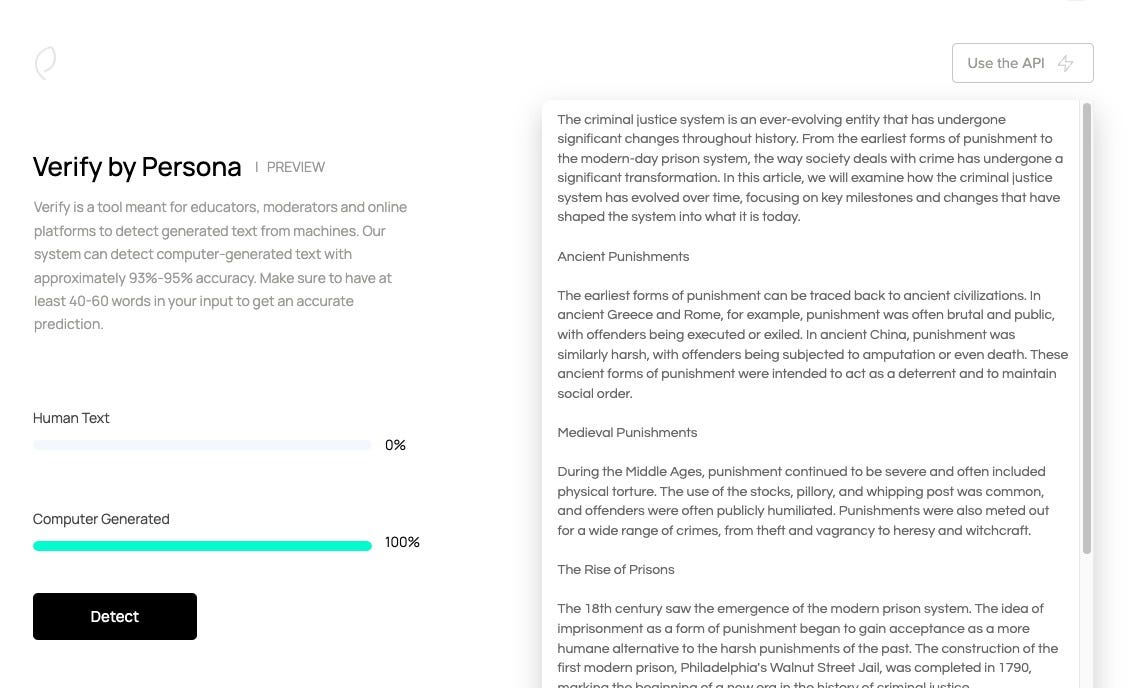

Here’s an article I told ChatGPT to write about the history of criminal justice:

A 3rd-party startup called Persona checked it and flagged it as 100% computer-generated.

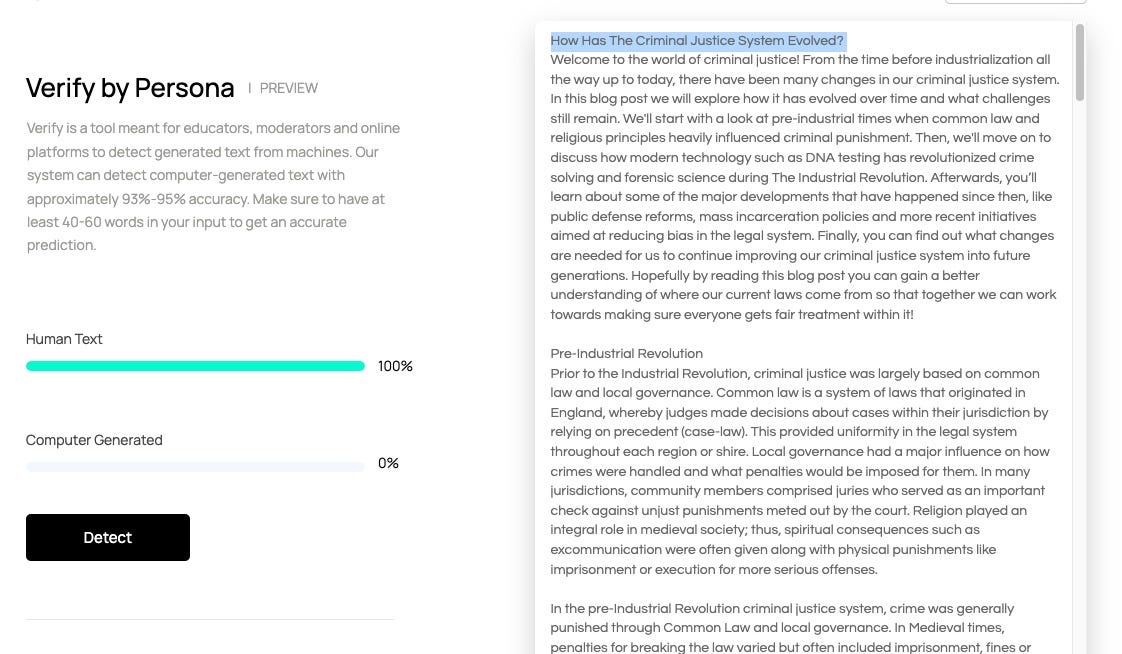

Compare this to Wraith Scribe, where the raw text is considered 100% human text:

For the record, I generated three 2,500+ word articles for a content mill. Every single one of them was detected as 100% human-generated.

Here’s the math. If we low-ball Persona’s estimates and assume it’s only 90% accurate (so it gets 10% of texts wrong), and assume each article is judged independently, then the chance of it getting all 3 articles wrong is 0.1 × 0.1 × 0.1:

There’s a 0.1% chance that Wraith Scribe is evading AI detectors purely by luck.

And if you take them at their word, at 93-95% accuracy, you get 0.07³ and 0.05³:

A 0.0343% and 0.0125% chance, respectively, that Wraith Scribe is evading AI detection just by luck.
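If you want to check the arithmetic yourself, here’s a minimal Python sketch. The function name `chance_all_missed` is my own for illustration, and it assumes the detector’s mistakes on each article are independent of one another, which is a simplification.

```python
def chance_all_missed(accuracy: float, n_articles: int) -> float:
    """Chance that a detector with the given accuracy gets every one of
    n_articles wrong, assuming its errors are independent (an assumption)."""
    miss_rate = 1.0 - accuracy        # e.g. 90% accurate -> wrong 10% of the time
    return miss_rate ** n_articles    # all n articles misjudged in a row


# The three accuracy levels discussed above, for 3 articles each.
for accuracy in (0.90, 0.93, 0.95):
    p = chance_all_missed(accuracy, 3)
    print(f"{accuracy:.0%} accurate: {p:.4%} chance of 3 misses in a row")
```

Swap in a different article count or accuracy figure to see how quickly the “pure luck” explanation shrinks.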

Conclusion

For now, you can use obviously AI-generated text to create blog posts, add your own spin/expertise, and Google shouldn’t care.

But as an added layer of protection, I decided I might as well build in AI-detection evasion, so that the technique of having AI do 90% of the work and a human do the other 10% (editing / adding a little bit of expertise) stays more evergreen.